City leaders can help as AI linked to rise in Internet Crimes Against Children

By KATE COIL

TT&C Assistant Editor

With tablets, laptops, phones, and gaming systems under the Christmas tree, law enforcement officials are asking parents, teachers, and other mandated reporters to educate themselves about the rising risk of Internet Crimes Against Children (ICAC), especially through the use of AI.

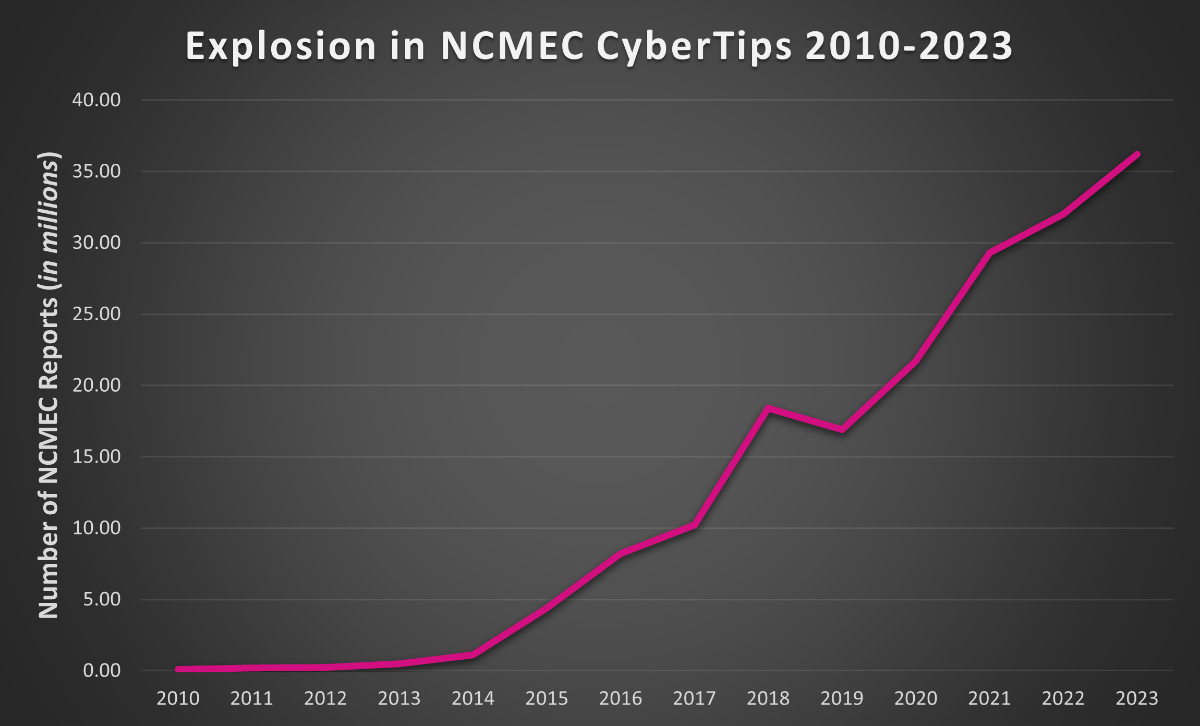

The National Center for Missing and Exploited Children (NCMEC) reported a 300% increase in reports of online exploitation of children between 2021 and 2023, an explosive increase verified by the UN and other agencies. Robert Burghardt, Assistant Special Agent in Charge with the Tennessee Bureau of Investigation (TBI) Cybercrime and Digital Evidence Unit, works with Tennessee's ICAC Squad, based at the Knoxville Police Department. The task force brings together 72 affiliates from municipal, county, and state government to investigate these crimes.

While some departments have their own in-house units or officers to handle this work, the task force works with other local law enforcement agencies to investigate ICAC cases and make arrests. Burghardt said that since he joined the unit in 2017, its caseload has grown from 30 cases a month to more than 100 a month in 2024. The types of crimes and the profiles of both perpetrators and victims are also changing.

“Coming into it, this seemed like a white male crime, but now it’s just anybody,” he said. “We’ve arrested teachers, police officers, pastors, and there is really no certain type of criminal. Sextortion is also becoming huge post-pandemic, and that mainly focuses on 14- to 17-year-old boys because boys are more apt to send out nude photographs than a female. Of all the CSAM [child sexual-abuse material] in the world, the majority are still girl victims because it’s usually a male crime, but needless to say, there are tons of male victims out there, too.”

While companies are getting better at finding and reporting inappropriate images on servers, the fact that more people are on the internet and have more ways of accessing the internet also makes it easier for predators to connect and groom victims. Burghardt said predators know where to go to meet underage victims online and will often convince victims to follow them to another site before exploiting them.

The advent of AI is also changing the landscape of ICAC, and Burghardt said the majority of new images he is seeing have used some form of AI technology.

“A lot of the AI cartoonish pictures we see are infants, and a lot of the AI-created pictures we are seeing you can’t even tell that it is a fake photograph,” he said. “That is how good the technology is getting. I do a lot on the dark web, but I’ve even noticed AI sites on the clear web where these guys are online all day, every day creating CSAM. They are literally bragging about what they created with each other in chatrooms.”

AI has also given predators frightening new capabilities, allowing them to use seemingly innocent photos for dark purposes. Where a predator once might have solicited a nude photo, Burghardt said AI means this step is no longer needed.

“It’s so easy now; you just click a button and can turn anyone nude,” he said. “Know that whatever you post on the Internet is out of your hands, and people can do whatever they want with that photograph. If you just Google AI to nude photos, there are tons of sites you can subscribe to. Some even let you throw in a link to an Instagram account, and they will generate photos from that account. I can go on your Facebook page, grab a picture of your child – whether it's an infant or a 17-year-old – and instantly turn that into an AI image where I can put that child’s head on a photograph of CSAM.”

The ability to create these images from ones innocently posted online is driving the rise in sextortion reports.

“They don’t even have to reach out to a kid now,” he said. “They will randomly find a kid online, find a photograph of that kid, make a nude image, and then reach out to the kid and say ‘hey, I’ve got your nudes.’ The kid never did anything. They then threaten to send that photo to all his friends and family, and these kids feel trapped. They don’t know who to turn to or who to tell. They don’t think anyone will believe they didn’t take that photo.”

Once an image is posted online, it can circulate for decades. NCMEC said that fewer than half of exploitative still images and fewer than a quarter of exploitative videos on the web are unique, so discovering a previously unseen image may mean a child is currently in danger.

“Unfortunately, those photos will still be circulating long after we’ve passed away,” Burghardt said. “When NCMEC reports a tip, they will mention if they have never seen that photo before. That means there is a new victim out there we have to identify. When that happens it's a priority, and we put everything down to start working that case over any other. We will then report it back to NCMEC.”

AI technology is also being used to combat CSAM production. Investigators are using it both to identify children in images and to trick predators into thinking they are talking to children.

“Whatever the bad guys are using, law enforcement is using too,” Burghardt said. “If I want to pose undercover, I may take a photo of myself and make myself into a ten-year-old.”

Burghardt said AI-generated images are currently prosecuted differently from photographs. Taking a picture of a child in person counts as production of child sexual abuse material, but work is under way to add AI image generation to the legal definition of creation. Burghardt encouraged any local officials or residents who want to back such a bill to contact their state legislators and voice their support.

“The only way you are going to defeat this entire issue of exploitation of children is education; we can’t arrest our way out of it,” Burghardt said. “If you can stop the communication part, you will get rid of a lot of the crime. Even if a local police department or school doesn’t have a lot of education themselves on this, they can go to NCMEC’s website and download free presentations and materials to educate teachers, kids, parents, and the public.”

Burghardt said he would like to see more of these materials displayed in public to help educate, start conversations, and encourage children who may have been victims to contact those who can help. When an NCMEC flier is posted in a bathroom at a school, library, or public park, children who may be hesitant to talk to their parents, guardians, or other authority figures about being exploited can make reports directly to NCMEC.

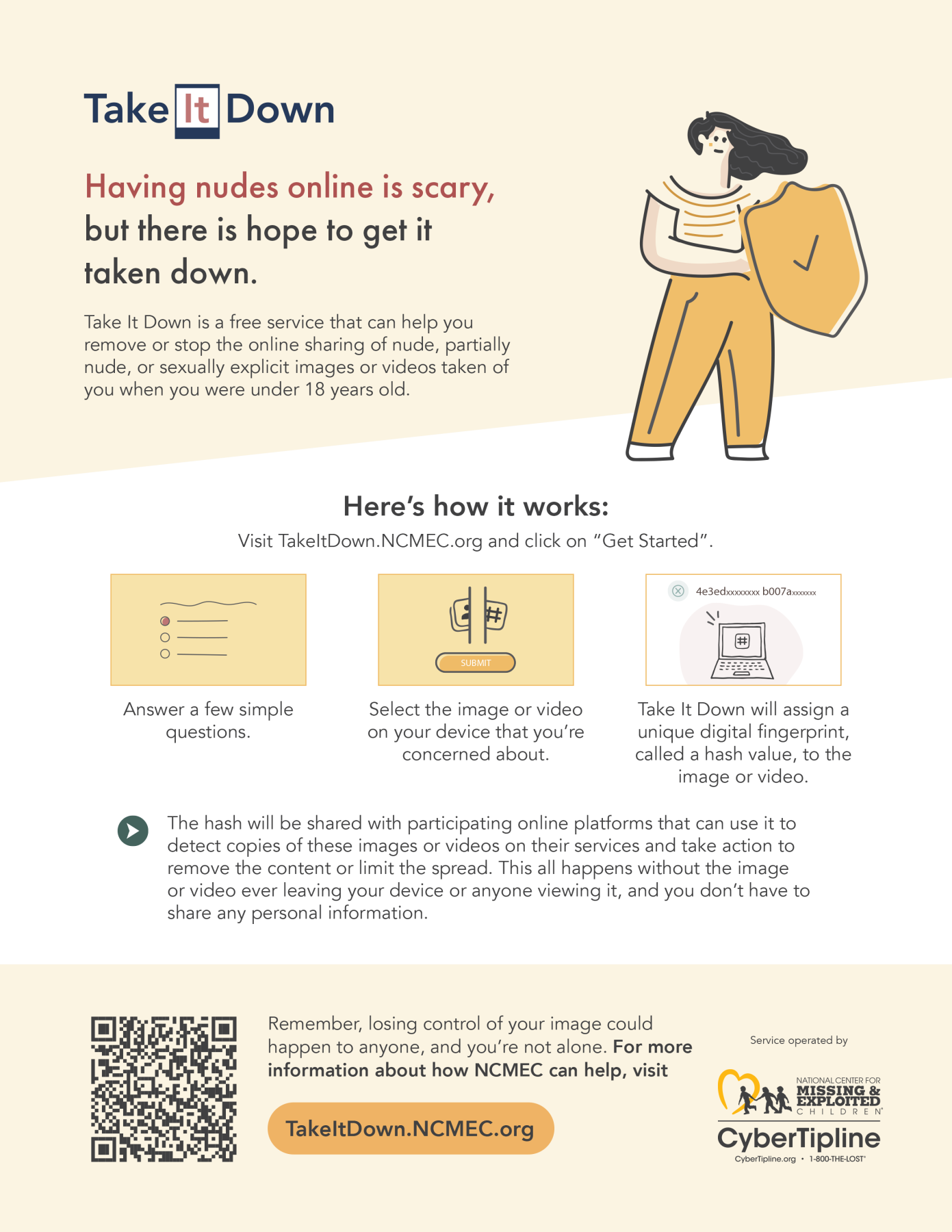

“The overall topic is taboo,” he said. “Everyone knows about it, but no one wants to talk about it because it is uncomfortable. Even if a library or a school goes to NCMEC’s website and prints out one of their fliers to put up in the bathroom it can help. They have a flier and a website called Take It Down where if a child has a picture or video they are worried about being out there, they can go to that website, answer a few questions, and without the image leaving their laptop or phone, the app searches across the Internet to find if that image is there. The company is then notified to take the image down. I would like to have one of those fliers in every single school bathroom stall so kids can secretly scan QR codes without everyone knowing.”

With children having more ways to access the internet, Burghardt said parents have to stay vigilant about their children’s devices and online time. He recommends that parents know what games their kids are playing, check for themselves what features those games have, including chat functions, and put rules in place. He also recommends making sure children follow those rules.

“Parents have to be parents,” he said. “People worry about taking their kids’ phone and ruining their privacy, but at the end of the day, that is your phone, not your child’s. When we do a search warrant where a kid is involved, we don’t ask the kid if we have permission to take their phone; we ask the parent. I think in society alone a device is an easy, quick babysitter, and even I’m guilty of it. For a lot of kids, their device and the internet is their world. It’s how they communicate with their friends. Just be aware of what is going on.”

He also recommends that children use electronic devices only in family or common areas, not alone in a bedroom or bathroom. Burghardt said 90% of the images he sees that were taken by a child were taken in a bedroom or bathroom. He also recommends rules that ban devices after dinner or at bedtime.

Tennessee Attorney General Jonathan Skrmetti led 31 state attorneys general in a letter to Congressional leadership urging them to pass the bipartisan Kids Online Safety Act (“KOSA”), crucial legislation protecting children from online harm, before the end of the year.

The coalition letter emphasized the urgent need to address the growing crisis in youth mental health linked to social media use, citing studies showing that minors spend more than five hours a day online. One provision of the act would provide parents with new tools to identify harmful behaviors and improved ways to report dangerous content.